CircuitNet: A Brain-Inspired Neural Network Architecture for Enhanced Task Performance Across Diverse Domains

The success of artificial neural networks (ANNs) stems from mimicking simplified brain structures. Neuroscience reveals that neurons interact through various connectivity patterns, known as circuit motifs, which are crucial for processing information. However, most ANNs model only one or two such motifs, which limits their performance across different tasks. Early ANNs, like multi-layer perceptrons, organized neurons into layers with connections resembling synapses. More recent neural architectures remain inspired by biological nervous systems but lack the complex connectivity found in the brain, such as local density and global sparsity. Incorporating these insights could enhance ANN design and efficiency.

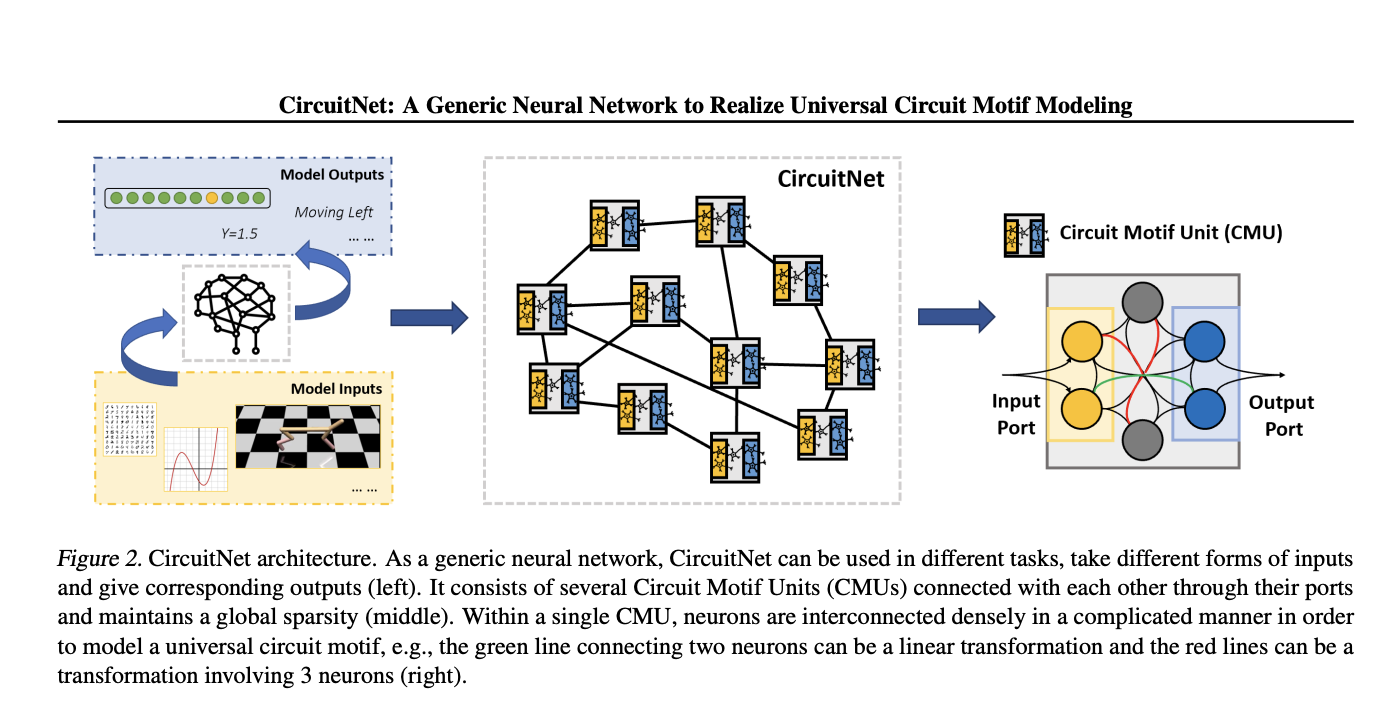

Researchers from Microsoft Research Asia introduced CircuitNet, a neural network inspired by neuronal circuit architectures. CircuitNet’s core unit, the Circuit Motif Unit (CMU), consists of densely connected neurons capable of modeling diverse circuit motifs. Unlike traditional feed-forward networks, CircuitNet incorporates feedback and lateral connections, following the brain’s locally dense and globally sparse structure. Experiments show that CircuitNet, with fewer parameters, outperforms popular neural networks in function approximation, image classification, reinforcement learning, and time series forecasting. This work highlights the benefits of incorporating neuroscience principles into deep learning model design.
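To make "locally dense and globally sparse" concrete, here is a minimal NumPy sketch of a connectivity mask. It is an illustration under stated assumptions, not the paper's implementation: the function name `circuit_mask`, the choice of treating the first `num_ports` neurons of each unit as ports, and the rule that only ports connect across units are all hypothetical.

```python
import numpy as np

def circuit_mask(num_units: int, unit_size: int, num_ports: int) -> np.ndarray:
    """Boolean mask where mask[i, j] is True if neuron j may signal neuron i.

    Assumption (not from the paper): the first `num_ports` neurons of each
    unit act as ports, and only ports are wired across units.
    """
    n = num_units * unit_size
    idx = np.arange(n)
    unit_id = idx // unit_size                 # which unit each neuron belongs to
    is_port = (idx % unit_size) < num_ports    # hypothetical port assignment

    same_unit = unit_id[:, None] == unit_id[None, :]    # dense inside a unit
    port_to_port = is_port[:, None] & is_port[None, :]  # sparse links between units
    mask = same_unit | port_to_port
    np.fill_diagonal(mask, False)              # no self-connections in this sketch
    return mask

mask = circuit_mask(num_units=4, unit_size=8, num_ports=2)
n = mask.shape[0]
density = mask.sum() / (n * (n - 1))           # fraction of possible connections used
print(f"overall connection density: {density:.3f}")
```

Inside each unit every neuron can reach every other neuron, so feed-forward, feedback, and lateral paths are all available locally, while the overall density stays well below 1 because units only talk to each other through their ports.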

Previous neural network designs often mimic biological neural structures. Early models like single and multi-layer perceptrons were inspired by simplified neuron signaling. CNNs and RNNs drew from visual and sequential processing in the brain, respectively. Other innovations, like spiking neural networks and capsule networks, also reflect biological processes. Key deep learning techniques, including attention mechanisms, dropout, and normalization, parallel neural functions such as selective attention and neuron firing patterns. These approaches have achieved significant success, but unlike the proposed CircuitNet, they cannot generally model complex combinations of neural circuits.

The Circuit Neural Network (CircuitNet) models signal transmission between neurons within CMUs to support diverse circuit motifs such as feed-forward, mutual, feedback, and lateral connections. Signal interactions are modeled using linear transformations, neuron-wise attention, and neuron pair products, allowing CircuitNet to capture complex neural patterns. Neurons are organized into locally dense, globally sparse CMUs, interconnected via input/output ports, facilitating intra- and inter-unit signal transmission. CircuitNet is adaptable to various tasks, including reinforcement learning, image classification, and time series forecasting, functioning as a general neural network architecture.
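The three kinds of signal interaction named above can be sketched for a single CMU as follows. This is a hedged illustration only: the function `cmu_step`, the weight names `W_lin`, `W_att`, and `W_prod`, the softmax gating, and the `tanh` nonlinearity are assumptions chosen for readability, and the paper's exact formulation may differ.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D vector."""
    e = np.exp(z - z.max())
    return e / e.sum()

def cmu_step(x, W_lin, W_att, W_prod):
    """One signal-transmission step among the neurons of a single unit.

    x is the vector of neuron states; each W_* is a square matrix over the
    unit's neurons, so any neuron can influence any other (feed-forward,
    feedback, mutual, and lateral paths are all representable).
    """
    linear = W_lin @ x                      # linear transformation of incoming signals
    attention = softmax(W_att @ x) * x      # neuron-wise attention: each state is gated
    pair = x * (W_prod @ x)                 # neuron pair products: sum_j W[i, j] * x_i * x_j
    return np.tanh(linear + attention + pair)

# Usage with small random weights (all values here are illustrative).
rng = np.random.default_rng(0)
num_neurons = 8
x = rng.normal(size=num_neurons)
W_lin, W_att, W_prod = (0.1 * rng.normal(size=(num_neurons, num_neurons)) for _ in range(3))
x_next = cmu_step(x, W_lin, W_att, W_prod)
print(x_next.shape)
```

Applying `cmu_step` repeatedly lets signals circulate inside the unit, which is where feedback and lateral motifs differ from a single feed-forward pass.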

The study presents experimental results and analysis of CircuitNet across various tasks, comparing it with baseline models. While the primary goal wasn’t to surpass state-of-the-art models, comparisons are made for context. The results show that CircuitNet demonstrates superior function approximation, faster convergence, and better performance in deep reinforcement learning, image classification, and time series forecasting tasks. In particular, CircuitNet outperforms traditional MLPs and achieves results comparable to or better than those of other advanced models like ResNet, ViT, and transformers, with fewer parameters and computational resources.

In conclusion, CircuitNet is a neural network architecture inspired by neural circuits in the brain. Its basic building blocks are CMUs, groups of densely connected neurons capable of modeling diverse circuit motifs. The network’s structure mirrors the brain’s locally dense and globally sparse connectivity. Experimental results show that CircuitNet outperforms traditional neural networks like MLPs, CNNs, RNNs, and transformers in various tasks, including function approximation, reinforcement learning, image classification, and time series forecasting. Future work will focus on refining the architecture and enhancing its capabilities with advanced techniques.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.
