MIT breakthrough could transform robot training

MIT researchers have developed a robot training method that reduces time and cost while improving adaptability to new tasks and environments.

The approach – called Heterogeneous Pretrained Transformers (HPT) – combines vast amounts of diverse data from multiple sources into a unified system, effectively creating a shared language that generative AI models can process. This method marks a significant departure from traditional robot training, where engineers typically collect specific data for individual robots and tasks in controlled environments.

Lead researcher Lirui Wang, an electrical engineering and computer science graduate student at MIT, believes that while many cite insufficient training data as a key challenge in robotics, a bigger issue lies in the vast array of different domains, modalities, and robot hardware. The team's work demonstrates how to effectively combine and utilise all these diverse elements.

The research team developed an architecture that unifies various data types, including camera images, language instructions, and depth maps. HPT utilises a transformer model, similar to those powering advanced language models, to process visual and proprioceptive inputs.
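To make the idea of a unified input format concrete, here is a minimal sketch rather than the researchers' implementation. It assumes each input type is mapped to the same fixed number of equally sized tokens and that the tokens are joined into one sequence for a shared transformer to read. The names `TOKEN_DIM`, `TOKENS_PER_INPUT`, `make_projection`, `tokenize` and `build_sequence` are hypothetical, and fixed random weights stand in for learned ones.

```python
import random

TOKEN_DIM = 8         # hypothetical width of the shared token space
TOKENS_PER_INPUT = 4  # hypothetical number of tokens produced per input


def make_projection(in_dim, out_dim, seed):
    """Fixed random matrix (out_dim rows of in_dim weights) standing in for learned weights."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def tokenize(features, n_tokens, projection):
    """Split a flat feature list into n_tokens chunks and project each chunk to TOKEN_DIM values."""
    chunk = len(features) // n_tokens
    tokens = []
    for i in range(n_tokens):
        piece = features[i * chunk:(i + 1) * chunk]
        tokens.append([sum(w * x for w, x in zip(row, piece)) for row in projection])
    return tokens


def build_sequence(inputs):
    """Turn differently sized inputs into one sequence of equally sized tokens."""
    sequence = []
    for index, features in enumerate(inputs.values()):
        # Assumes each feature list length is divisible by TOKENS_PER_INPUT.
        projection = make_projection(len(features) // TOKENS_PER_INPUT, TOKEN_DIM, seed=index)
        sequence.extend(tokenize(features, TOKENS_PER_INPUT, projection))
    return sequence


# Stand-in feature vectors of different sizes (illustrative values only).
inputs = {
    "camera": [0.1] * 64,
    "depth": [0.5] * 32,
    "language": [1.0] * 16,
}
sequence = build_sequence(inputs)
print(len(sequence), len(sequence[0]))
```

Whatever the size of the raw input, every source ends up contributing tokens of the same shape, which is what would let a single model consume them together.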

In practical tests, the system outperformed traditional training methods by more than 20 per cent in both simulated and real-world scenarios. This improvement held true even when robots encountered tasks significantly different from their training data.

For pretraining, the researchers assembled a corpus of 52 datasets containing over 200,000 robot trajectories across four categories. This allows robots to learn from a wide range of experiences, including human demonstrations and simulations.

One of the system’s key innovations lies in its handling of proprioception (the robot’s awareness of its position and movement). The team designed the architecture to place equal importance on proprioception and vision, enabling more sophisticated dexterous motions.
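One way to picture "equal importance" is as an equal share of the model's input sequence. The short sketch below is an illustration under that assumption, not a description of the team's code. The function `modality_share` and all token counts are hypothetical.

```python
def modality_share(token_counts):
    """Return each modality's fraction of the total input tokens."""
    total = sum(token_counts.values())
    return {name: count / total for name, count in token_counts.items()}


# Hypothetical naive setup: many image patch tokens, one token for joint state.
naive = modality_share({"vision": 196, "proprioception": 1})

# Hypothetical balanced setup: both inputs get the same token budget.
balanced = modality_share({"vision": 16, "proprioception": 16})

print(naive)
print(balanced)
```

In the naive case the proprioceptive signal makes up well under one per cent of the sequence, while in the balanced case it makes up half.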

Looking ahead, the team aims to enhance HPT’s capabilities to process unlabelled data, similar to advanced language models. Their ultimate vision involves creating a universal robot brain that could be downloaded and used for any robot without additional training.

While acknowledging they are in the early stages, the team remains optimistic that scaling could lead to breakthrough developments in robotic policies, similar to the advances seen in large language models.

You can find a copy of the researchers’ paper here (PDF)

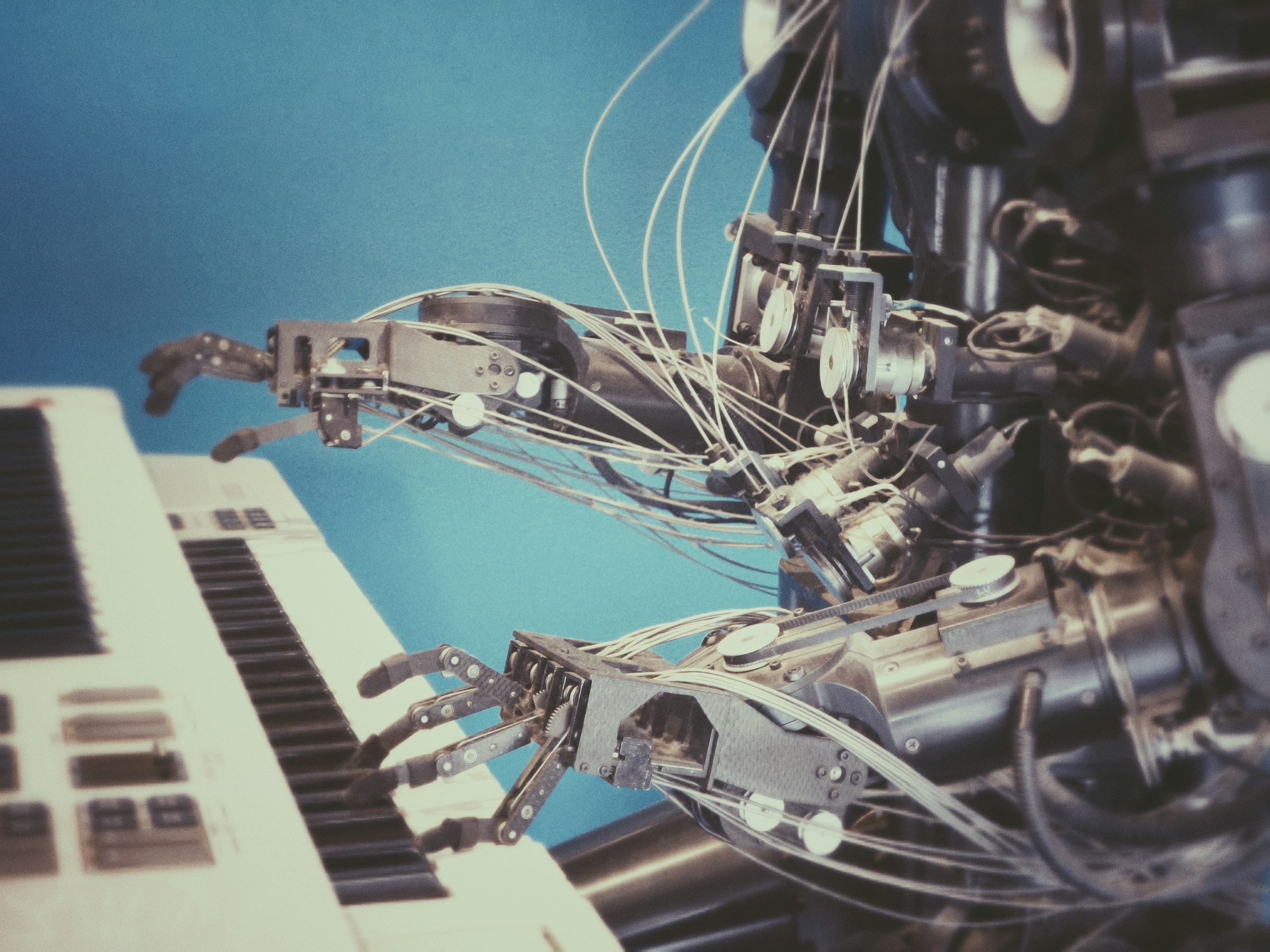

(Photo by Possessed Photography)

