The high- and low-level context behind Nvidia CEO Jensen Huang’s GTC 2025 keynote | Dion Harris interview

Jensen Huang, CEO of Nvidia, covered both high-level concepts and low-level tech speak in his GTC 2025 keynote last Tuesday at the sprawling SAP Center in San Jose, California. My big takeaway was that humanoid robots and self-driving cars are coming faster than we realize.

Huang, who runs one of the most valuable companies on earth with a market value of $2.872 trillion, talked about synthetic data and how new models would help humanoid robots and self-driving cars reach the market faster.

He also noted that we’re about to shift from data-intensive, retrieval-based computing to a different form enabled by AI: generative computing, where AI reasons out an answer and generates the information, rather than having a computer fetch stored data from memory.

I was fascinated by how Huang moved from subject to subject with ease, without a script. But there were moments when I needed an interpreter to give me more context. He covered some deep topics, like humanoid robots, digital twins, the intersection with games, and the Earth-2 simulation, which uses a lot of supercomputers to model both global and local climate change effects as well as daily weather.

Just after the keynote, I spoke with Dion Harris, Nvidia’s senior director of AI and HPC AI factory solutions, to get more context on Huang’s announcements.

Here’s an edited transcript of our interview.

VentureBeat: Did you own any particular part of the keynote?

Harris: I worked on the first two hours of the keynote, all the material that had to do with AI factories, up until he handed it over to the enterprise stuff. We’re very involved in all of that.

VentureBeat: I’m always interested in digital twins and the Earth-2 simulation. I recently interviewed the CTO of Ansys about the sim-to-real gap. How far do you think we’ve come on that?

Harris: There was a montage that he showed just after the CUDA-X libraries. It was an interesting description of the journey toward closing that sim-to-real gap. It describes how we’ve been on this path of accelerated computing, accelerating applications to help them run faster and more efficiently. Now, with AI brought into the fold, it’s creating real-time acceleration in simulation. But of course you also need the visualization, which AI is helping with as well. You have this interesting confluence: core simulation being accelerated to train and build AI; AI capabilities that make the simulation run much faster while still delivering accuracy; and AI assisting with the visualization it takes to create realistic, physics-informed views of complex systems.

When you think of something like Earth-2, it’s the culmination of all three of those core technologies: simulation, AI, and advanced visualization. To answer your question about how far we’ve come: in just the last couple of years, we’ve worked with folks like Ansys, Cadence, and other ISVs who have built long track records and deep expertise in core simulation, and we’ve partnered with folks building AI models and AI-based surrogate approaches. We think this is an inflection point, where we’re going to see a huge takeoff in physics-informed, reality-based digital twins. There’s a lot of exciting work happening.

VentureBeat: He introduced this computing concept fairly early, talking about how we’re moving from retrieval-based computing to generative computing. That’s something I hadn’t noticed [before]. It seems like it could be so disruptive that it has an impact on this space as well. 3D graphics has always seemed like such a data-heavy kind of computing. Is that somehow being alleviated by AI?

Harris: I’ll use a phrase that’s very contemporary within AI: retrieval-augmented generation. It’s used in a different context, but I’ll use it to explain the idea here as well. There will still be retrieval elements. Obviously, if you’re a brand, you want to maintain the integrity of your car design and your branding elements, whether that’s materials, colors, or what have you. But there will be elements within the design process that can be generated. It will be a mix of retrieval, drawing on stored database assets and classes of objects or images, and lots of generation that helps streamline the work, so you don’t have to compute everything.

It goes back to what Jensen was describing at the beginning, when he talked about how ray tracing works now: calculating one pixel and using AI to generate the other 15. The design process will look very similar. Some assets will be retrieval-based, grounded in a specific set of artifacts or IP assets you need to build from. Other pieces will be completely generated, because they’re elements where AI can help fill in the gaps.

VentureBeat: Once you’re faster and more efficient, it starts to alleviate the burden of all that data.

Harris: The speed is cool, but it gets really interesting when you think of the new types of workflows it enables, like exploring different design spaces. That’s when you see the potential of what AI can do. You see designers get access to some of these tools and realize they can explore thousands of possibilities. You mentioned Earth-2. One of the most fascinating things some of the AI surrogate models allow you to do is not just run a single forecast a thousand times faster, but run a thousand forecasts. You get a stochastic representation of all the possible outcomes, so you have a much more informed basis for making a decision, as opposed to a very limited view. Because traditional simulation is so resource-intensive, you can’t explore all the possibilities. You have to be very prescriptive about what you pursue and simulate. AI, we think, will create a whole new set of possibilities to do things very differently.

VentureBeat: With Earth-2, you might say, “It was foggy here yesterday. It was foggy here an hour ago. It’s still foggy.”

Harris: I would take it a step further and say that you would be able to understand not just the fog right now, but a range of possibilities for where things will be two weeks out. You’d get very localized, regional views of that, as opposed to the broad generalizations most forecasts offer now.

VentureBeat: The particular advance we have in Earth-2 today, what was that again?

Harris: There weren’t many Earth-2 announcements in the keynote, but we’ve been doing a ton of work throughout the climate tech ecosystem. In terms of the timeline: last year at Computex we unveiled the work we’ve been doing with Taiwan’s Central Weather Administration, demonstrating CorrDiff over the region of Taiwan. More recently, at Supercomputing, we upgraded the model, fine-tuning and training it on the U.S. data set. That’s a much larger geography, with totally different terrain and weather patterns to learn. It demonstrates that the technology is both advancing and scaling.

As for some of the other regions we’re working with, at the show we announced that we’re working with G42, which is based in the United Arab Emirates. They’re taking CorrDiff and building on top of it on their platform to create regional models for their specific weather patterns. Much like what you were describing about fog, I assumed that most of their weather and forecasting challenges would involve things like sandstorms and heat waves. But they’re actually very concerned with fog. That’s something I never knew. A lot of their meteorological systems are used to help manage fog, especially for transportation and infrastructure that rely on that information. It’s an interesting use case, and we’ve been working with them to deploy Earth-2, and CorrDiff in particular, to predict fog at a very localized level.

VentureBeat: It’s actually getting very practical use, then?

Harris: Absolutely.

VentureBeat: How much detail is in there now? At what level of detail do you have everything on Earth?

Harris: Earth-2 is a moonshot project. We’re going to build it piece by piece to get to the end state we talked about, a full digital twin of the Earth. We’ve been doing simulation for quite some time. On the AI side, we’ve obviously done some work with forecasting and with adopting other AI surrogate models. CorrDiff is a unique approach in that it takes a data set and super-resolves it. But you have to train it on the regional data.

If you think about the globe as a patchwork of regions, that’s how we’re approaching it. We started with Taiwan, as I mentioned. We’ve expanded to the continental United States. We’ve expanded to EMEA regions, working with some weather agencies there to use their data to train CorrDiff adaptations of the model. We’ve worked with G42. It’s going to be a region-by-region effort, and it relies on a couple of things. One is having the data, whether observed, simulated, or historical, to train the regional models. There’s lots of that out there, and we’ve worked with a lot of regional agencies. The other is making the compute and platforms available to do it.

The good news is we’re committed. We know it’s going to be a long-term project. With the ecosystem coming together to contribute data and technology, it seems like we’re on a good trajectory.

VentureBeat: It’s interesting how hard that data is to get. I figured the satellites up there would just fly over some number of times and you’d have it all.

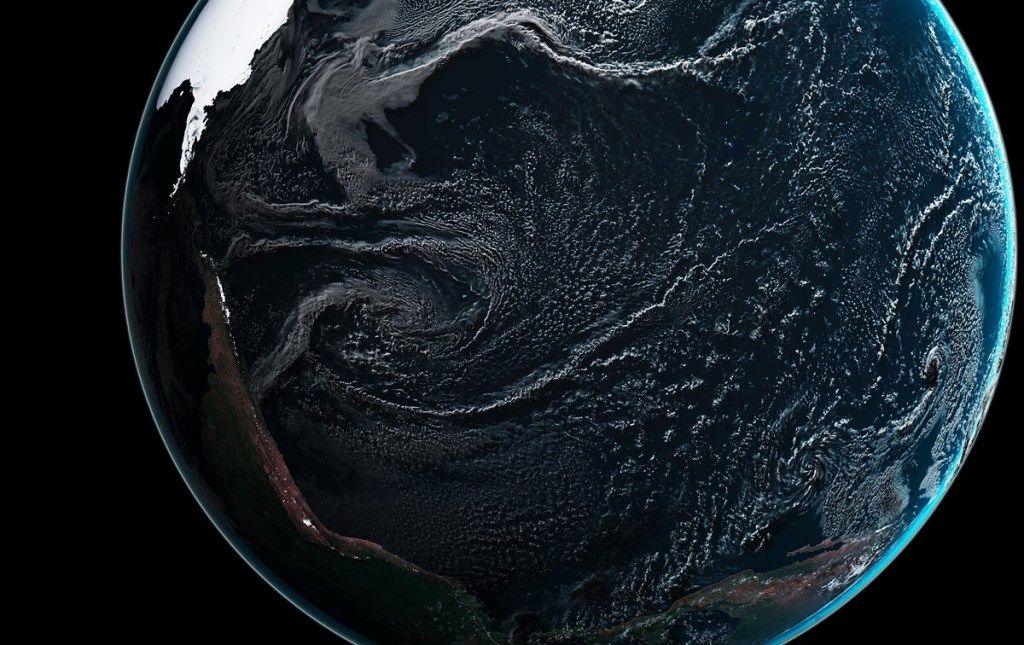

Harris: That’s a whole other data source: all the geospatial data. In some cases that’s proprietary data, so we’re working with some geospatial companies, for example Tomorrow.io. They have satellite data that we’ve used. In the montage that opened the keynote, you saw the satellite roving over the planet; that was imagery we took from Tomorrow.io specifically. OroraTech is another company we’ve worked with. To your point, there’s a lot of observed satellite geospatial data that we can and do use to train some of these regional models as well.

VentureBeat: How do we get to a complete picture of the Earth?

Harris: One of what I’ll call the magic elements of the Earth-2 platform is Omniverse. It allows you to ingest a number of different types of data and stitch them together with temporal and spatial consistency, whether it’s satellite data, simulated data, or other observational sensor data. Take satellites, for example. We were talking with one of our partners. Their data has great spatial detail, because they literally scan the Earth every day at the same time. They’re in an orbital path that lets them capture every strip of the Earth every day. But that doesn’t give you great temporal granularity. That’s where you want to take the spatial data you might get from a satellite company and also use modeling and simulation data to fill in the temporal gaps.

Stitching all these different data sources together through the Omniverse platform is what will ultimately allow us to deliver on this. It won’t be gated by any one approach or modality, and that flexibility gives us a path toward the goal.

VentureBeat: Microsoft, with Flight Simulator 2024, mentioned that there are some cases where countries don’t want to give up their data. [Those countries asked,] “What are you going to do with this data?”

Harris: Airspace definitely presents a limitation there, since you have to fly over it. With satellites, obviously, you can capture data from a much higher altitude.

VentureBeat: With a digital twin of something like a BMW factory, is that a far simpler problem? Or do you run into other challenges? It’s only so many square feet. It’s not the entire planet.

Harris: It’s a different problem. With the Earth, it’s such a chaotic system. You’re trying to model and simulate air, wind, heat, moisture. There are all these variables that you have to either simulate or account for. That’s the real challenge of the Earth. It isn’t the scale so much as the complexity of the system itself.

The trickier thing about modeling a factory is it’s not as deterministic. You can move things around. You can change things. Your modeling challenges are different because you’re trying to optimize a configurable space versus predicting a chaotic system. That creates a very different dynamic in how you approach it. But they’re both complex. I wouldn’t downplay it and say that having a digital twin of a factory isn’t complex. It’s just a different kind of complexity. You’re trying to achieve a different goal.

VentureBeat: Do you feel like things like the factories are pretty well mastered at this point? Or do you also need more and more computing power?

Harris: It’s a very compute-intensive problem, for sure. The key benefit in terms of where we are now is that there’s a pretty broad recognition of the value of producing a lot of these digital twins. We have incredible traction not just within the ISV community, but also actual end users. Those slides we showed up there when he was clicking through, a lot of those enterprise use cases involve building digital twins of specific processes or manufacturing facilities. There’s a pretty general acceptance of the idea that if you can model and simulate it first, you can deploy it much more efficiently. Wherever there are opportunities to deliver more efficiency, there are opportunities to leverage the simulation capabilities. There’s a lot of success already, but I think there’s still a lot of opportunity.

VentureBeat: Back in January, Jensen talked a lot about synthetic data. He was explaining how close we are to getting really good robots and autonomous cars because of synthetic data. You drive a car billions of miles in a simulation and you only have to drive it a million miles in real life. You know it’s tested and it’s going to work.

Harris: He made a couple of key points today. I’ll try to summarize. The first was how the scaling laws apply to robotics, specifically through synthetic data generation. That provides an incredible opportunity for both the pre-training and post-training stages of that whole workflow. The second point was related: we open-sourced, or made available, our own synthetic data set.

We believe two things will happen there. First, by unlocking this data set and making it available, you get much more adoption, with many more folks picking it up and building on top of it. We think that starts the data flywheel we’ve seen in the virtual AI space. Second, the scaling law helps drive more data generation through that post-training workflow, and making our own data set available should drive further adoption as well.

VentureBeat: Back to the things accelerating robots toward being everywhere soon: were there other big things worth noting?

Harris: Again, there are a number of megatrends accelerating interest and investment in robotics. The first one seemed a bit loosely coupled, but I think he connected the dots at the end: the evolution of reasoning and thinking models. When you think about how dynamic the physical world is, any autonomous machine or robot, whether it’s a humanoid, a mover, or anything else, needs to be able to spontaneously interact, adapt, think, and engage. The advancement of reasoning models, delivering that capability as an AI both virtually and physically, is going to help create an inflection point for adoption.

Now the AI will become much more intelligent, much more able to deal with all the variables that come up. It’ll come to a door and see it’s locked. What do I do? You can build those sorts of reasoning capabilities into AI: let’s retrace our steps, let’s go find another route. That’s going to be a huge driver for advancing physical AI. That’s a lot of what he talked about in the first half, describing why Blackwell is so important and why inference is so important for deploying those reasoning capabilities, both in the data center and at the edge.

VentureBeat: I was watching a Waymo at an intersection near GDC the other day. A crowd crossed the street, and then even more people started jaywalking. The Waymo was politely waiting there. It was never going to move. A human driver would start inching forward: Hey, guys, let me through. But a Waymo wouldn’t risk that.

Harris: When you think about the real world, it’s very chaotic. It doesn’t always follow the rules. There are all these spontaneous circumstances where you need to think and reason and infer in real time. That’s where, as these models become more intelligent, both virtually and physically, it’ll make a lot of the physical AI use cases much more feasible.

VentureBeat: Is there anything else you wanted to cover today?

Harris: The one thing I would touch on briefly is inference and the importance of the work we’re doing in software. We’re known as a hardware company, but he spent a good amount of time describing Dynamo and laying out why it matters. Inference at scale is a very hard problem to solve, and solving it is what will let companies deploy AI at large scale. Right now, as companies move from proof of concept to production, inference is where the rubber meets the road in terms of reaping value from AI. A lot of the work we’ve been doing on both hardware and software will unlock many of the virtual AI use cases and the agentic AI elements, moving up that curve he was highlighting, and then of course physical AI as well.

Dynamo being open source will help drive adoption. Because it can plug into other inference runtimes, whether that’s SGLang or vLLM, it will have much broader traction and can become the standard layer, the standard operating system for the data center.
